Ansible Collections

Make integration tests for your Ansible Collection fast

March 24, 2020 - Words by Tadej Borovšak - 6 min read

Tests are great. Especially integration tests. But we need quite a lot of them to cover the codebase. And because we want to run them as often as practically possible, we must make sure our test suite is fast. What follows is a story of how Steampunks made Sensu Go Ansible Collection’s test times as short as possible.

In the beginning, there was …

… a primordial soup of directories ;) We knew how to layout module tests,

and we knew how role tests should be structured. Since our collection

contained both, we just stuck those two sets of tests into a

tests/integration directory and called it a day. What we ended up with is

the following directory tree:

tests/integration/

├── modules

│ └── molecule

│ ├── asset

│ ├── check

│ ├── ...

│ ├── socket_handler

│ └── user

└── roles

├── agent

│ └── molecule

│ ├── reference

│ └── shared

├── backend

│ └── molecule

│ ├── reference

│ └── shared

└── install

└── molecule

├── centos

├── shared

└── ubuntu

It was a bit annoying having to change directories when running different tests, but overall, things worked well. And the CI liked this test structure as well. We created a separate job for each of the Molecule’s scenario set, and the CircleCI happily executed them in parallel.

But once the honeymoon period was over, we started to notice some flaws with our setup. Because we placed Molecule scenarios into different directories, we were forced to duplicate the configuration for each scenario set. And while we were able to reduce the duplication by using symbolic links, things still did not feel right.

What we also noticed is that our scenarios for testing modules started dominating the CI run time. And things were only getting worse as time passed. Each new Ansible module pair that we wrote added almost two minutes of test time. Unacceptable!

Also, it was annoying to change directories when testing different parts of our Ansible Collection. We already said that? Well, then it must have bothered us more than we were willing to admit ;)

So a few months back, we started fixing things.

Flattening the test suite

To make tests more pleasant to run, we first moved all Molecule scenarios into a single directory. What we ended up with is this directory structure:

tests/integration/

└── molecule

├── module_asset

├── module_check

├── ...

├── module_socket_handler

├── module_user

├── role_agent_default

├── role_backend_default

├── role_install_custom_version

└── role_install_default

During the process of moving the test scenarios into a single directory, we also removed most of the duplicated configuration. And it felt great ;)

Now we were able to run any test by moving into the tests/integration

directory and running molecule test -s <scenario>. Or all of them by running

molecule test --all.

That last command is not as innocent as it may seem. Why? Because it runs all of the scenarios one after another, which is painfully slow. But we had a trick up our sleeve: executing multiple Molecule instances in parallel.

to rescue! Parallelization the

Now, before we were able to start running multiple Molecule instances in

parallel, we needed to make sure that they will not stomp on each other’s

state. And thankfully, Molecule already had a solution for this problem: the

--parallel command-line switch. When we run Molecule with that switch turned

on, it will store its state in a randomly-named temporary folder. Problem

solved.

But we still needed to solve the problem of how to run the Molecule instances

in parallel. And our poison of choice for this kind of task is good old

make. It just felt right to use make to make tests fast ;)

So what we did is write a short Makefile:

modules := $(wildcard molecule/module_*)

roles := $(wildcard molecule/role_*)

scenarios := $(modules) $(roles)

.PHONY: all

all: $(scenarios)

.PHONY: modules

modules: $(modules)

.PHONY: roles

roles: $(roles)

.PHONY: $(modules) $(roles)

$(modules) $(roles):

molecule -c base.yml test --parallel -s $(notdir $@)

This Makefile allows us to run a test scenario molecule/<scenario> by

executing make molecule/<scenario>. We also added a default target for

running all tests at once. And the best part? Now that we have this, we can

parallelize test execution by adding -j<n> parameter to the make

invocation. Set <n> to 4 and make will run four tests concurrently. Magic.

By default,

makewill intersperse output from the commands that it is executing in parallel. If you need to have a readable output, you should add a-Oparameter to themakeinvocation, forcing it to group the output per target.

This way, we solved the problem of long-running tests on development computers. But we still needed to deal with the CI system. Why? Because development and CI environments tend to be quite different.

When we are developing locally, we usually use a single beefy machine with plenty of processor cores and memory chips, while in the CI environment, we typically have a few smaller (virtual) computers. What this means is that running multiple tests in parallel is no big deal on a local machine, but we need to spread the workload over multiple computers in the CI environment.

So it was time to science the crap out of this problem ;)

NP-what!?!

If we want our tests to finish in the shortest time possible, we need to decide which test scenarios each of the CI workers should run. Sounds easy, right? Well, it is not easy. It is NP-hard . Yeah, that hard.

But luckily for us, we do not need an optimal solution. It-sucks-but-not-too-much is just fine for our use-case ;) And this is why we can use something called Longest Processing Time heuristic to construct a decent schedule in almost no time at all.

There is one important caveat here: our scheduling algorithm should be completely deterministic. Why? Because we will be calculating schedules on every CI worker. If the scheduling implementation places one scenario in different queues on different CI workers, our CI might end up not running specific tests, which defeats the purpose of running our tests in the first place.

And we are almost done. All we need to do is measure the time required to run

each of the scenarios. And thanks to the time command, this is as easy as

running /usr/bin/time -f "$s %e" make <scenario> for each scenario.

Now that we had all of our pieces of functionality gathered, it was time to put them together.

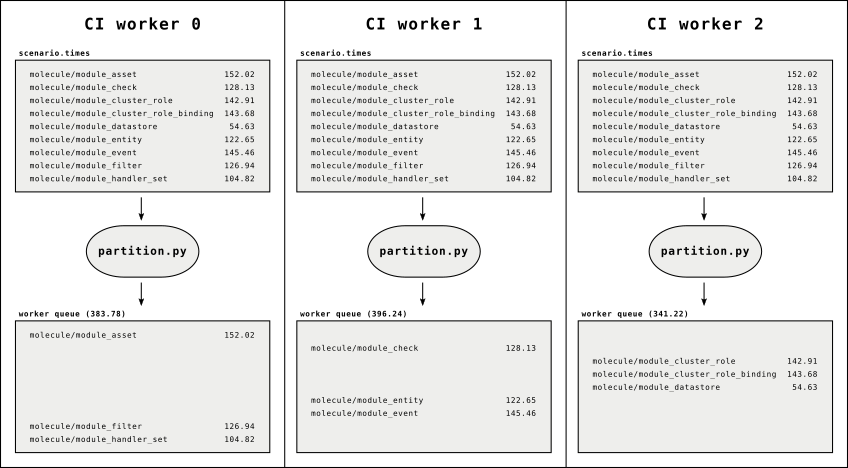

The full circle(CI)

The first thing we did was write the timing script that measures the time it takes to execute each Molecule scenario, as described in the previous section. We store the results of this script in a file since the timings do not need to be adjusted often.

Next, we wrote the

partitioning script

that takes the scenario run

durations and partitions them into several queues. The partitioning script

uses the CIRCLE_NODE_TOTAL environment variable to determine the number of

partitions it should produce and then outputs the scenarios from the

CIRCLE_NODE_INDEX one. This way, when we run our test on

CircleCI

using

multiple workers, each one gets its own dedicated set of scenarios.

A visual representation of test partitioning.

To bind all of this together, we added a Makefile target that executes the partitioning script. And we were finally done.

Takeaways

And this is it. It has been quite a journey getting this far, but what we ended up with is a battery of tests that is easy to execute manually and is parallelization-aware. As a bonus, when we add a new testing scenario to the collection, the scheduling will automatically adjust itself.

But this auto-tuning is not entirely free (nothing ever is): now we need to maintain our scenario timings. But this is a minor inconvenience compared to the task of manually keeping the schedules up-to-date.

If you are interested in more details, feel free to browse the sources in the Sensu Go Ansible Collection repository that is hooked-up to the CircleCI . You can also get in touch with us on Twitter or Reddit .

Cheers!